Recommended reading:

https://docs.ceph.com/en/reef/architecture/#ceph-file-system

https://docs.ceph.com/en/reef/cephfs/#ceph-file-system

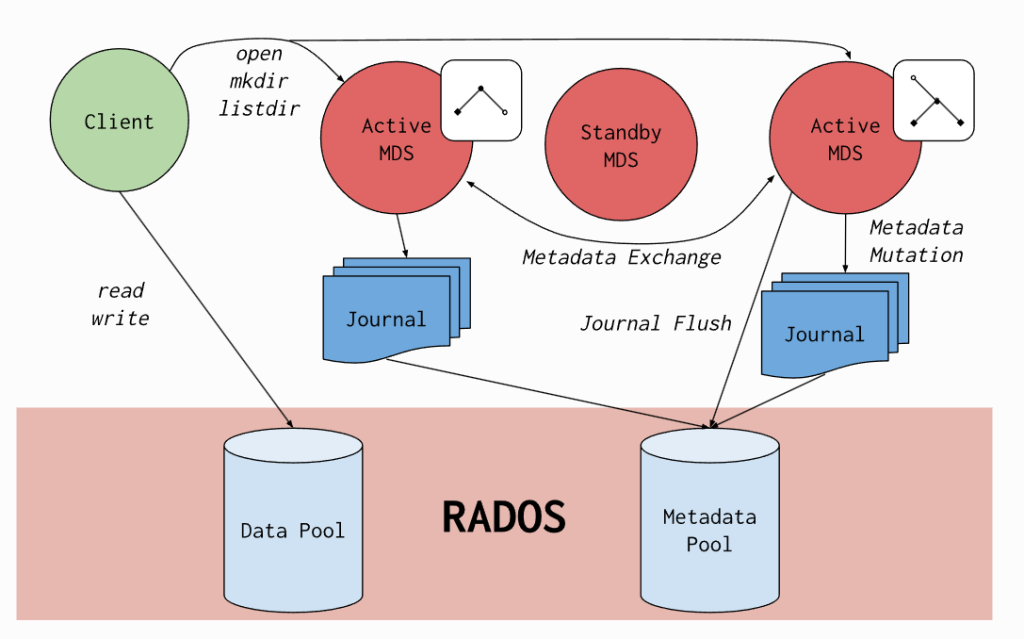

CephFS architecture diagram

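- 1.CephFS overview

RBD solves the problem of attaching a remote disk, but one RBD image cannot safely be shared by several hosts at the same time. When many clients need to read and write the same data, CephFS is the file system solution for the job.

CephFS is a POSIX-compliant file system that stores its data directly in the Ceph storage cluster. Its implementation differs somewhat from Ceph block devices, from Ceph object storage (which provides the S3 and Swift APIs), and from the native library (librados).

As the diagram above shows, CephFS can be accessed through the kernel module or through FUSE. If the host does not have the ceph kernel module, consider the FUSE client instead. Run "modinfo ceph" to check whether the host has the ceph kernel module.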

[root@ceph141 ~]# modinfo ceph

filename: /lib/modules/5.15.0-119-generic/kernel/fs/ceph/ceph.ko

license: GPL

description: Ceph filesystem for Linux

author: Patience Warnick <patience@newdream.net>

author: Yehuda Sadeh <yehuda@hq.newdream.net>

author: Sage Weil <sage@newdream.net>

alias: fs-ceph

srcversion: 8ABA5D4086D53861D2D72D1

depends: libceph,fscache,netfs

retpoline: Y

intree: Y

name: ceph

vermagic: 5.15.0-119-generic SMP mod_unload modversions

sig_id: PKCS#7

signer: Build time autogenerated kernel key

sig_key: 5F:22:FC:96:FA:F2:9E:F0:76:8D:52:81:4A:3C:6F:F8:61:08:2C:6D

sig_hashalgo: sha512

signature: 76:9E:6E:70:EF:E0:C3:A7:5F:24:C7:C1:2D:EC:24:B9:EE:84:17:64:

F3:18:EC:8F:59:C3:88:64:96:56:1C:FE:97:CE:91:36:C9:BF:98:4B:

A7:F1:63:A5:C8:31:23:5F:12:43:4F:D7:C0:D9:E7:97:72:33:4D:DA:

04:43:60:F9:91:E7:CD:BC:45:30:E4:5A:E0:1B:20:B3:8B:4A:FD:6D:

9F:C6:B8:75:BF:F4:AD:1B:E4:86:FE:85:34:CA:97:DE:17:45:D4:00:

D2:AA:71:3D:18:84:36:D5:33:31:7F:02:8B:1A:26:49:A2:B3:C0:02:

C8:9A:F6:02:7F:B3:82:9C:83:29:3B:F6:ED:E4:BA:BB:0D:F9:E8:27:

04:05:39:29:C8:33:BD:35:8B:77:29:48:89:8F:E9:DD:55:89:3D:CF:

2D:B8:E7:17:8E:20:F6:EE:7D:5B:6D:9A:92:A4:ED:6A:9D:9B:83:1E:

6B:A1:CA:70:28:43:BB:49:88:12:39:65:01:49:2F:0A:4B:D6:9F:26:

EB:D6:E5:FD:35:85:85:E6:CB:E2:87:D1:D3:9D:C8:8F:23:10:DC:D1:

C2:F0:D8:A7:AF:F6:1A:FD:0A:7C:40:1F:40:7B:08:4F:1A:8F:A9:45:

CC:99:57:DB:5E:35:F1:16:49:3A:1B:EF:B4:E6:4B:95:B5:56:A4:D1:

7E:5E:48:10:EE:BC:5C:1A:A7:B0:F1:2E:20:33:5B:55:24:CA:05:A6:

6B:16:20:AE:24:6A:4E:97:1B:81:E0:A1:03:E0:A9:C2:BD:05:36:E6:

09:33:F5:E5:D6:C2:17:CE:28:20:27:59:61:C3:18:06:DB:CC:0F:90:

81:AC:99:26:E1:93:CE:CF:A5:94:56:41:D9:32:C7:6F:FE:F1:8B:9D:

51:4B:82:FC:8B:90:21:B7:96:94:38:4C:DF:EC:0F:85:E5:90:3B:40:

C7:E3:04:7F:D9:F2:81:43:8C:E9:57:18:88:06:B5:3A:F9:E5:A3:01:

52:BD:E7:36:AF:D5:BD:64:16:C2:2A:3D:61:7B:10:09:35:91:C4:5D:

B6:0A:D0:22:2F:8E:A7:E7:48:EE:27:BA:86:E9:BA:96:27:55:E0:F5:

51:36:B7:B0:11:D6:50:6E:85:92:03:DA:95:0E:92:26:A2:7B:5F:AD:

19:C2:A2:CD:71:F3:D3:72:91:8C:79:3D:85:3B:75:C0:AA:81:0F:93:

49:6E:21:F5:38:F5:03:69:17:75:36:04:A8:81:6A:03:2E:7D:0D:10:

81:24:81:4E:78:93:08:8C:1E:CC:BA:5B:47:3A:08:40:A5:CE:4F:3A:

33:DF:A5:1D:4F:8F:0A:62:2A:A3:62:4B

parm: disable_send_metrics:Enable sending perf metrics to ceph cluster (default: on)
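The check above can be turned into a small decision helper. This is a minimal sketch, not part of the original notes: the function name `pick_cephfs_client` is hypothetical, and it only assumes that `modinfo ceph` exits non-zero when the module is absent.

```shell
#!/bin/sh
# Hypothetical helper: report which CephFS client type this host can use.
# Prints "kernel" when the ceph kernel module is available, otherwise "fuse".
pick_cephfs_client() {
    if modinfo ceph >/dev/null 2>&1; then
        echo "kernel"
    else
        echo "fuse"
    fi
}

pick_cephfs_client
```

A provisioning script could branch on the result to choose between `mount -t ceph` and `ceph-fuse`.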

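[root@ceph141 ~]#

- 2.CephFS architecture

CephFS needs at least one running metadata server (MDS) daemon (ceph-mds). This daemon manages the metadata of the files stored on CephFS.

Despite its name, the MDS does not keep the metadata on its own local storage. The metadata lives in a pool on the RADOS cluster; the MDS serves and caches it, acting as the file system interface in front of RADOS.

When a client accesses a file it first contacts the MDS, which keeps an index of the metadata in memory. From that the client learns which data nodes to read from. This is similar to HDFS.

- 3.CephFS compared with NFS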

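Compared with NFS, CephFS has these main advantages:

- 1.Data redundancy at the storage layer: the underlying RADOS cluster replicates the data, so there is no single point of failure as with a single NFS server;

- 2.The underlying RADOS cluster consists of N storage nodes, so I/O is spread across them and throughput is high;

- 3.The underlying RADOS cluster consists of N storage nodes, so Ceph scales out very well;

Deploying CephFS with one active and one standby MDS

Recommended reading: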

https://docs.ceph.com/en/reef/cephfs/createfs/

- 1.Create two pools, one for the CephFS metadata and one for the data

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 41h)

mgr: ceph141.mbakds(active, since 41h), standbys: ceph142.qgifwo

osd: 9 osds: 9 up (since 41h), 9 in (since 41h)

data:

pools: 3 pools, 25 pgs

objects: 307 objects, 644 MiB

usage: 2.8 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 25 active+clean

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool create cephfs_data

pool 'cephfs_data' created

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool create cephfs_metadata

pool 'cephfs_metadata' created

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool ls detail | grep ceph

pool 12 'cephfs_data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 431 lfor 0/0/429 flags hashpspool stripe_width 0 read_balance_score 2.25

pool 13 'cephfs_metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 431 lfor 0/0/429 flags hashpspool stripe_width 0 read_balance_score 3.09

[root@ceph141 ~]#

- 2.Create a file system named "violet-cephfs"

[root@ceph141 ~]# ceph fs new violet-cephfs cephfs_metadata cephfs_data

Pool 'cephfs_data' (id '12') has pg autoscale mode 'on' but is not marked as bulk.

Consider setting the flag by running

# ceph osd pool set cephfs_data bulk true

new fs with metadata pool 13 and data pool 12

[root@ceph141 ~]#

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool ls detail | grep ceph

pool 12 'cephfs_data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 435 lfor 0/0/429 flags hashpspool stripe_width 0 application cephfs read_balance_score 2.25

pool 13 'cephfs_metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 435 lfor 0/0/429 flags hashpspool stripe_width 0 pg_autoscale_bias 4 pg_num_min 16 recovery_priority 5 application cephfs read_balance_score 3.09

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool get cephfs_data bulk

bulk: false

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool set cephfs_data bulk true # mark the 'cephfs_data' pool as bulk

set pool 12 bulk to true

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool get cephfs_data bulk

bulk: true

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool ls detail | grep ceph # note how pg_num changed

pool 12 'cephfs_data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 256 pgp_num 256 autoscale_mode on last_change 444 lfor 0/0/442 flags hashpspool,bulk stripe_width 0 application cephfs read_balance_score 1.55

pool 13 'cephfs_metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 435 lfor 0/0/429 flags hashpspool stripe_width 0 pg_autoscale_bias 4 pg_num_min 16 recovery_priority 5 application cephfs read_balance_score 3.09
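The pool and file system steps can be collected into one reviewable plan. The sketch below is an illustration under stated assumptions: the helper name `cephfs_create_plan` and the `APPLY` switch are hypothetical, and it only prints commands that already appear in these notes. It sets the bulk flag before `ceph fs new`, which should avoid the "not marked as bulk" hint seen above.

```shell
#!/bin/sh
# Hypothetical helper: print the commands that create a CephFS file system.
# $1 = file system name, $2 = metadata pool, $3 = data pool
cephfs_create_plan() {
    echo "ceph osd pool create $2"
    echo "ceph osd pool create $3"
    echo "ceph osd pool set $3 bulk true"
    echo "ceph fs new $1 $2 $3"
    echo "ceph orch apply mds $1"
}

# Print the plan for review.
cephfs_create_plan violet-cephfs cephfs_metadata cephfs_data

# Only execute it when explicitly requested (APPLY=1) on an admin node.
if [ "${APPLY:-0}" = "1" ]; then
    cephfs_create_plan violet-cephfs cephfs_metadata cephfs_data | sh -ex
fi
```

Printing the plan first keeps the run reproducible and makes it easy to compare against the transcript before anything touches the cluster.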

[root@ceph141 ~]#

- 3.View the file system that was created

[root@ceph141 ~]# ceph fs ls

name: violet-cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

[root@ceph141 ~]#

[root@ceph141 ~]# ceph mds stat

violet-cephfs:0

[root@ceph141 ~]#

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_ERR

1 filesystem is offline

1 filesystem is online with fewer MDS than max_mds

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 41h)

mgr: ceph141.mbakds(active, since 41h), standbys: ceph142.qgifwo

mds: 0/0 daemons up

osd: 9 osds: 9 up (since 41h), 9 in (since 41h)

data:

volumes: 1/1 healthy

pools: 5 pools, 313 pgs

objects: 307 objects, 645 MiB

usage: 2.9 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 313 active+clean

[root@ceph141 ~]#

- 4.Deploy MDS daemons for the file system

[root@ceph141 ~]# ceph orch apply mds violet-cephfs

Scheduled mds.violet-cephfs update...

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs ls

name: violet-cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

[root@ceph141 ~]#

[root@ceph141 ~]# ceph mds stat

violet-cephfs:1 {0=violet-cephfs.ceph142.pmzglk=up:active} 1 up:standby

[root@ceph141 ~]#

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 41h)

mgr: ceph141.mbakds(active, since 41h), standbys: ceph142.qgifwo

mds: 1/1 daemons up, 1 standby

osd: 9 osds: 9 up (since 41h), 9 in (since 42h)

data:

volumes: 1/1 healthy

pools: 5 pools, 313 pgs

objects: 329 objects, 645 MiB

usage: 2.9 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 313 active+clean

io:

client: 5.8 KiB/s rd, 0 B/s wr, 5 op/s rd, 0 op/s wr

[root@ceph141 ~]#

- 5.View the detailed CephFS status

[root@ceph141 ~]# ceph fs status violet-cephfs # the active MDS is on ceph142 and the standby is on ceph143

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active violet-cephfs.ceph142.pmzglk Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 1730G

cephfs_data data 0 1730G

STANDBY MDS

violet-cephfs.ceph143.scwesv

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)

[root@ceph141 ~]#

- 6.Add more MDS servers

[root@ceph141 ~]# ceph orch daemon add mds violet-cephfs ceph141

Deployed mds.violet-cephfs.ceph141.pthitg on host 'ceph141'

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs status violet-cephfs

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active violet-cephfs.ceph142.pmzglk Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 1730G

cephfs_data data 0 1730G

STANDBY MDS

violet-cephfs.ceph141.pthitg

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)

[root@ceph141 ~]#

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 41h)

mgr: ceph141.mbakds(active, since 41h), standbys: ceph142.qgifwo

mds: 1/1 daemons up, 1 standby

osd: 9 osds: 9 up (since 41h), 9 in (since 42h)

data:

volumes: 1/1 healthy

pools: 5 pools, 313 pgs

objects: 329 objects, 645 MiB

usage: 2.9 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 313 active+clean

[root@ceph141 ~]#

Verifying high availability of the active/standby CephFS setup

- 1.View the detailed CephFS status

[root@ceph141 ~]# ceph fs status violet-cephfs

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active violet-cephfs.ceph142.pmzglk Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 1730G

cephfs_data data 0 1730G

STANDBY MDS

violet-cephfs.ceph141.pthitg

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)

[root@ceph141 ~]#

- 2.Power off ceph142

[root@ceph142 ~]# init 0

- 3.Check the CephFS status (the change takes about 30s to show up)

[root@ceph141 ~]# ceph fs status violet-cephfs

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 replay violet-cephfs.ceph141.pthitg 0 0 0 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 2595G

cephfs_data data 0 1730G

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)

[root@ceph141 ~]#

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs status violet-cephfs

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active violet-cephfs.ceph141.pthitg Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 96.0k 2595G

cephfs_data data 0 1730G

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)
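Instead of re-running the status command by hand during a failover, the state of rank 0 can be polled. The sketch below is an illustration, not the author's procedure: the helper names are hypothetical, and the parser assumes `ceph mds stat` prints lines shaped like the ones in this transcript (`{0=<daemon>=up:<state>}`), with states such as `up:replay` during takeover.

```shell
#!/bin/sh
# Hypothetical helper: extract the state of rank 0 from `ceph mds stat` output
# read on stdin. Prints nothing when no MDS holds rank 0.
rank0_state() {
    sed -n 's/.*{0=[^=]*=\([^},]*\).*/\1/p'
}

# Hypothetical helper: poll until rank 0 is up:active, at most $1 times,
# sleeping 5 seconds between attempts. Needs the ceph CLI and a keyring.
wait_for_active() {
    tries=$1
    while [ "$tries" -gt 0 ]; do
        state=$(ceph mds stat 2>/dev/null | rank0_state)
        [ "$state" = "up:active" ] && return 0
        tries=$((tries - 1))
        sleep 5
    done
    return 1
}

# Parser demo on a line copied from the transcript above.
echo 'violet-cephfs:1 {0=violet-cephfs.ceph142.pmzglk=up:active} 1 up:standby' | rank0_state
```

On an admin node, `wait_for_active 12 && echo "failover finished"` would wait up to roughly a minute for the standby to take over.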

[root@ceph141 ~]#

- 4.Check the cluster status

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_WARN

insufficient standby MDS daemons available

1/3 mons down, quorum ceph141,ceph143

3 osds down

1 host (3 osds) down

Degraded data redundancy: 331/993 objects degraded (33.333%), 29 pgs degraded, 313 pgs undersized

services:

mon: 3 daemons, quorum ceph141,ceph143 (age 2m), out of quorum: ceph142

mgr: ceph141.mbakds(active, since 44h)

mds: 1/1 daemons up

osd: 9 osds: 6 up (since 2m), 9 in (since 44h)

data:

volumes: 1/1 healthy

pools: 5 pools, 313 pgs

objects: 331 objects, 655 MiB

usage: 2.9 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 331/993 objects degraded (33.333%)

284 active+undersized

29 active+undersized+degraded

io:

client: 12 KiB/s wr, 0 op/s rd, 0 op/s wr

[root@ceph141 ~]#

- 5.Boot the ceph142 node again, then check the cluster status once more

[root@ceph141 ~]# ceph -s

cluster:

id: 11e66474-0e02-11f0-82d6-4dcae3d59070

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 26s)

mgr: ceph141.mbakds(active, since 44h)

mds: 1/1 daemons up, 1 standby

osd: 9 osds: 9 up (since 9s), 9 in (since 44h)

data:

volumes: 1/1 healthy

pools: 5 pools, 313 pgs

objects: 331 objects, 655 MiB

usage: 2.6 GiB used, 5.3 TiB / 5.3 TiB avail

pgs: 313 active+clean

io:

client: 3.9 KiB/s wr, 0 op/s rd, 0 op/s wr

recovery: 26 KiB/s, 0 objects/s

[root@ceph141 ~]#

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs status violet-cephfs

violet-cephfs - 0 clients

================

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active violet-cephfs.ceph141.pthitg Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs_metadata metadata 106k 1730G

cephfs_data data 0 1730G

STANDBY MDS

violet-cephfs.ceph142.pmzglk

MDS version: ceph version 19.2.1 (58a7fab8be0a062d730ad7da874972fd3fba59fb) squid (stable)

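[root@ceph141 ~]#

CephFS client: mounting with the kernel ceph module (independent of whether ceph-common is installed)

- 1.On the admin node, create a user and export its keyring and key file

1.1 Create the user and grant capabilities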

[root@ceph141 ~]# ceph auth add client.linux mon 'allow r' mds 'allow rw' osd 'allow rwx'

added key for client.linux

[root@ceph141 ~]#

[root@ceph141 ~]# ceph auth get client.linux

[client.linux]

key = AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

caps mds = "allow rw"

caps mon = "allow r"

caps osd = "allow rwx"

[root@ceph141 ~]#
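The capabilities above give the client read/write access to every pool (`osd 'allow rwx'`). Ceph also provides `ceph fs authorize`, which generates capabilities scoped to a single file system and path. The sketch below only builds and prints that command; the helper name, the `APPLY` switch, and the client name `client.linux2` are hypothetical and chosen so the example does not clash with the existing `client.linux`.

```shell
#!/bin/sh
# Hypothetical helper: build a `ceph fs authorize` command line.
# $1 = file system name, $2 = client id, $3 = path, $4 = permissions (r or rw)
fs_authorize_cmd() {
    printf 'ceph fs authorize %s client.%s %s %s\n' "$1" "$2" "$3" "$4"
}

cmd=$(fs_authorize_cmd violet-cephfs linux2 / rw)
echo "$cmd"

# Only run it when explicitly requested (APPLY=1) on an admin node;
# the command prints the new keyring, which is saved to a file here.
if [ "${APPLY:-0}" = "1" ]; then
    $cmd > ceph.client.linux2.keyring
fi
```

Scoping the client to one file system limits the damage if the key leaks, at the cost of having to re-authorize when further file systems are added.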

1.2 Export the authentication information

[root@ceph141 ~]# ceph auth get client.linux > ceph.client.linux.keyring

[root@ceph141 ~]#

[root@ceph141 ~]# cat ceph.client.linux.keyring

[client.linux]

key = AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

caps mds = "allow rw"

caps mon = "allow r"

caps osd = "allow rwx"

[root@ceph141 ~]#

[root@ceph141 ~]# ceph auth print-key client.linux > linux.key

[root@ceph141 ~]#

[root@ceph141 ~]# cat linux.key ;echo

AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

[root@ceph141 ~]#

1.3 Copy the keyring and the key file to the target directory on the clients

[root@ceph141 ~]# scp ceph.client.linux.keyring 10.0.0.31:/etc/ceph

[root@ceph141 ~]# scp linux.key 10.0.0.93:/etc/ceph

- 2.Mount using the key directly; no key file needs to be copied

2.1 Check the local files

[root@prometheus-server31 ~]# ll /etc/ceph/

total 24

drwxr-xr-x 2 root root 4096 Apr 2 14:43 ./

drwxr-xr-x 132 root root 12288 Apr 2 06:39 ../

-rw-r--r-- 1 root root 134 Apr 2 14:43 ceph.client.linux.keyring # can be deleted; with 19.2.1 the mount then warns about the missing file, but still succeeds.

-rw-r--r-- 1 root root 92 Dec 18 22:48 rbdmap

[root@prometheus-server31 ~]#

2.2 Create the mount point and mount

[root@prometheus-server31 ~]# mkdir /data

[root@prometheus-server31 ~]# mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data -o name=linux,secret=AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# df -h | grep 6789

10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789:/ 1.7T 0 1.7T 0% /data

[root@prometheus-server31 ~]#

2.3 Write some test data

[root@prometheus-server31 ~]# ll /data/

total 4

drwxr-xr-x 2 root root 0 Apr 2 12:00 ./

drwxr-xr-x 22 root root 4096 Apr 2 14:45 ../

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# cp /etc/os-release /etc/fstab /data/

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# ll /data/

total 6

drwxr-xr-x 2 root root 2 Apr 2 14:54 ./

drwxr-xr-x 22 root root 4096 Apr 2 14:45 ../

-rw-r--r-- 1 root root 657 Apr 2 14:54 fstab

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]#

- 3.Mount using a secretfile

3.1 Check the local files

[root@elk93 ~]# ll /etc/ceph/

total 24

drwxr-xr-x 2 root root 4096 Apr 2 14:43 ./

drwxr-xr-x 143 root root 12288 Apr 2 06:12 ../

-rw-r--r-- 1 root root 40 Apr 2 14:43 linux.key

-rw-r--r-- 1 root root 92 Dec 18 22:48 rbdmap

[root@elk93 ~]#

3.2 Add local name-resolution entries

[root@elk93 ~]# cat >> /etc/hosts <<EOF

10.0.0.141 ceph141

10.0.0.142 ceph142

10.0.0.143 ceph143

EOF

3.3 Mount using the key file

[root@elk93 ~]# mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data -o name=linux,secretfile=/etc/ceph/linux.key # the warnings printed during the mount can be ignored

did not load config file, using default settings.

2025-04-02T14:55:47.175+0800 7f214eef7f40 -1 Errors while parsing config file!

2025-04-02T14:55:47.175+0800 7f214eef7f40 -1 can't open ceph.conf: (2) No such file or directory

2025-04-02T14:55:47.175+0800 7f214eef7f40 -1 Errors while parsing config file!

2025-04-02T14:55:47.175+0800 7f214eef7f40 -1 can't open ceph.conf: (2) No such file or directory

unable to get monitor info from DNS SRV with service name: ceph-mon

2025-04-02T14:55:47.199+0800 7f214eef7f40 -1 failed for service _ceph-mon._tcp

2025-04-02T14:55:47.199+0800 7f214eef7f40 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.linux.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin: (2) No such file or directory

[root@elk93 ~]#

[root@elk93 ~]# df -h | grep 6789

10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789:/ 1.7T 0 1.7T 0% /data

[root@elk93 ~]#
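In the listing above, linux.key is world-readable (`-rw-r--r--`), and passing `secret=` on the command line leaves the key in the shell history. A secretfile with owner-only permissions avoids both. The sketch below is illustrative: the helper name `write_secret_file` is hypothetical and the key used in the demo is a placeholder, not a real secret.

```shell
#!/bin/sh
# Hypothetical helper: write a CephX secret to a file only its owner can read.
# $1 = secret string, $2 = destination path
write_secret_file() {
    # The subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077 && printf '%s' "$1" > "$2" )
}

# Demo in a temporary directory with a placeholder value.
demo_dir=$(mktemp -d)
write_secret_file 'PLACEHOLDER-NOT-A-REAL-KEY' "$demo_dir/linux.key"
ls -l "$demo_dir/linux.key"
rm -rf "$demo_dir"
```

On an admin node the first argument could come from `ceph auth print-key client.linux`, as used earlier in these notes. For a key file that already exists, `chmod 600 /etc/ceph/linux.key` has the same effect.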

3.4 Write test data

[root@elk93 ~]# ll /data/

total 6

drwxr-xr-x 2 root root 2 Apr 2 14:54 ./

drwxr-xr-x 23 root root 4096 Apr 2 14:55 ../

-rw-r--r-- 1 root root 657 Apr 2 14:54 fstab

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

[root@elk93 ~]#

[root@elk93 ~]# cp /etc/passwd /data/

[root@elk93 ~]#

[root@elk93 ~]# ll /data/

total 9

drwxr-xr-x 2 root root 3 Apr 2 14:56 ./

drwxr-xr-x 23 root root 4096 Apr 2 14:55 ../

-rw-r--r-- 1 root root 657 Apr 2 14:54 fstab

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

[root@elk93 ~]#

3.5 Check node 31 again: the data is in sync

[root@prometheus-server31 ~]# ll /data/

total 9

drwxr-xr-x 2 root root 3 Apr 2 14:56 ./

drwxr-xr-x 22 root root 4096 Apr 2 14:45 ../

-rw-r--r-- 1 root root 657 Apr 2 14:54 fstab

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

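[root@prometheus-server31 ~]#

CephFS client: access from user space with FUSE

- 1.FUSE overview

Some operating systems do not ship the ceph kernel module. CephFS can still be used on them through FUSE.

FUSE stands for "Filesystem in Userspace". It lets a file system be implemented by an unprivileged user-space program, with no kernel code to write. The CephFS FUSE client is packaged separately as "ceph-fuse".

- 2.Install the ceph-fuse package

[root@elk92 ~]# apt -y install ceph-fuse

- 3.Create the mount point

[root@elk92 ~]# mkdir -pv /violet/cephfs

- 4.Copy the authentication file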

[root@elk92 ~]# mkdir /etc/ceph

[root@elk92 ~]# scp 10.0.0.141:/etc/ceph/ceph.client.admin.keyring /etc/ceph

[root@elk92 ~]# ll /etc/ceph

total 20

drwxr-xr-x 2 root root 4096 Apr 2 15:23 ./

drwxr-xr-x 139 root root 12288 Apr 2 15:23 ../

-rw------- 1 root root 151 Apr 2 15:23 ceph.client.admin.keyring

[root@elk92 ~]#

- 5.Mount CephFS with the ceph-fuse tool

[root@elk92 ~]# ceph-fuse -n client.admin -m 10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789 /violet/cephfs/

did not load config file, using default settings.

2025-04-02T15:25:32.212+0800 7fe0a00453c0 -1 Errors while parsing config file!

2025-04-02T15:25:32.212+0800 7fe0a00453c0 -1 can't open ceph.conf: (2) No such file or directory

2025-04-02T15:25:32.236+0800 7fe0a00453c0 -1 Errors while parsing config file!

2025-04-02T15:25:32.236+0800 7fe0a00453c0 -1 can't open ceph.conf: (2) No such file or directory

ceph-fuse[61510]: starting ceph client

2025-04-02T15:25:32.236+0800 7fe0a00453c0 -1 init, newargv = 0x5637a031e4d0 newargc=13

2025-04-02T15:25:32.236+0800 7fe0a00453c0 -1 init, args.argv = 0x5637a031e620 args.argc=4

ceph-fuse[61510]: starting fuse

[root@elk92 ~]#

[root@elk92 ~]# df -h | grep cephfs

ceph-fuse 1.7T 0 1.7T 0% /violet/cephfs

[root@elk92 ~]#

- 6.Write test data

[root@elk92 ~]# ll /violet/cephfs/

total 9

drwxr-xr-x 2 root root 4025 Apr 2 14:56 ./

drwxr-xr-x 3 root root 4096 Apr 2 15:23 ../

-rw-r--r-- 1 root root 657 Apr 2 14:54 fstab

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

[root@elk92 ~]#

[root@elk92 ~]# rm -f /violet/cephfs/fstab

[root@elk92 ~]#

[root@elk92 ~]# cp /etc/shadow /violet/cephfs/

[root@elk92 ~]#

[root@elk92 ~]# ll /violet/cephfs/

total 10

drwxr-xr-x 2 root root 3368 Apr 2 15:26 ./

drwxr-xr-x 3 root root 4096 Apr 2 15:23 ../

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

-rw-r----- 1 root root 1535 Apr 2 15:26 shadow

[root@elk92 ~]#

- 7.Check from another node that the data is there

[root@prometheus-server31 ~]# ll /data/

total 9

drwxr-xr-x 2 root root 3 Apr 2 15:26 ./

drwxr-xr-x 22 root root 4096 Apr 2 14:45 ../

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

-rw-r----- 1 root root 1535 Apr 2 15:26 shadow

[root@prometheus-server31 ~]#

Two ways to mount CephFS automatically at boot

- 1.Automatic mount at boot with an rc.local script (recommended)

1.1 Edit the startup script

[root@prometheus-server31 ~]# cat /etc/rc.local

#!/bin/bash

mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data -o name=linux,secret=AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

[root@prometheus-server31 ~]#
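An rc.local script can run before the network or the monitors are reachable, in which case a single `mount` call fails and is never retried. The sketch below wraps the mount in a small retry loop and skips it when /data is already mounted. The helper names `retry` and `mount_cephfs` are hypothetical, and the secretfile path assumes the key file from the secretfile section has been copied to this host.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to N times with a delay between tries.
# $1 = max attempts, $2 = delay in seconds, remaining args = command to run
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        "$@" && return 0
        n=$((n + 1))
        sleep "$delay"
    done
    return 1
}

# Hypothetical helper: mount CephFS on /data unless it is mounted already.
mount_cephfs() {
    mountpoint -q /data && return 0
    mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data \
        -o name=linux,secretfile=/etc/ceph/linux.key
}

# In /etc/rc.local one would call:  retry 10 3 mount_cephfs
# Quick self-check of the retry helper with a command that always succeeds:
retry 3 0 true && echo "retry helper works"
```

Making the mount step idempotent also means the script can be re-run by hand after boot without stacking a second mount on /data.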

1.2 Reboot the server

[root@prometheus-server31 ~]# reboot

1.3 Verify

[root@prometheus-server31 ~]# df -h | grep data

10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789:/ 1.7T 0 1.7T 0% /data

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# ll /data/

total 9

drwxr-xr-x 2 root root 3 Apr 2 15:26 ./

drwxr-xr-x 22 root root 4096 Apr 2 14:45 ../

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

-rw-r----- 1 root root 1535 Apr 2 15:26 shadow

[root@prometheus-server31 ~]#

- 2.Automatic mount at boot with /etc/fstab

2.1 Edit the fstab file

[root@elk93 ~]# tail -1 /etc/fstab

ceph141:6789,ceph142:6789,ceph143:6789:/ /data ceph name=linux,secretfile=/etc/ceph/linux.key,noatime,_netdev 0 2

[root@elk93 ~]#

2.2 Reboot the system

[root@elk93 ~]# reboot

2.3 Verify

[root@elk93 ~]# df -h | grep data

10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789:/ 1.7T 0 1.7T 0% /data

[root@elk93 ~]#

[root@elk93 ~]# ll /data/

total 9

drwxr-xr-x 2 root root 3 Apr 2 15:26 ./

drwxr-xr-x 23 root root 4096 Apr 2 14:55 ../

-rw-r--r-- 1 root root 427 Apr 2 14:54 os-release

-rw-r--r-- 1 root root 2941 Apr 2 14:56 passwd

-rw-r----- 1 root root 1535 Apr 2 15:26 shadow

[root@elk93 ~]#

[root@elk93 ~]#

Docker with Ceph: a hands-on example

- 1.Create an RBD image on the server side

[root@ceph141 ~]# rbd create -s 200G violet/docker

[root@ceph141 ~]#

[root@ceph141 ~]# rbd info violet/docker

rbd image 'docker':

size 200 GiB in 51200 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: dfd78426b738

block_name_prefix: rbd_data.dfd78426b738

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Wed Apr 2 16:16:49 2025

access_timestamp: Wed Apr 2 16:16:49 2025

modify_timestamp: Wed Apr 2 16:16:49 2025

[root@ceph141 ~]#

- 2.Prepare the Docker client for the automatic mount at boot

[root@elk92 ~]# df -h | grep ubuntu--vg-ubuntu

/dev/mapper/ubuntu--vg-ubuntu--lv 97G 41G 51G 45% /

[root@elk92 ~]#

[root@elk92 ~]#

[root@elk92 ~]# mkdir -p /violet/data/docker

[root@elk92 ~]#

[root@elk92 ~]# apt -y install ceph-common

- 3.Copy the authentication files

[root@ceph141 ~]# scp /etc/ceph/ceph.{client.admin.keyring,conf} 10.0.0.92:/etc/ceph

- 4.Map the image

[root@elk92 ~]# rbd map violet/docker

/dev/rbd0

[root@elk92 ~]#

[root@elk92 ~]# rbd showmapped

id pool namespace image snap device

0 violet docker - /dev/rbd0

[root@elk92 ~]#

[root@elk92 ~]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 200 GiB, 214748364800 bytes, 419430400 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

[root@elk92 ~]#

- 5.Format the device and configure the automatic mount at boot

[root@elk92 ~]# mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0 isize=512 agcount=16, agsize=3276800 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1 bigtime=0 inobtcount=0

data = bsize=4096 blocks=52428800, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=25600, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

[root@elk92 ~]#

[root@elk92 ~]# cat /etc/rc.local

#!/bin/bash

rbd map violet/docker

mount /dev/rbd0 /violet/data/docker/

[root@elk92 ~]#

[root@elk92 ~]# chmod +x /etc/rc.local

[root@elk92 ~]#

[root@elk92 ~]# ll /etc/rc.local

-rwxr-xr-x 1 root root 53 Apr 2 16:23 /etc/rc.local*
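The rc.local script above assumes the image always maps to /dev/rbd0, which only holds while it is the first image mapped on the host. The sketch below uses the `/dev/rbd/<pool>/<image>` symlink instead. It rests on an assumption worth checking on the target host: that the udev rules shipped with ceph-common create that symlink after `rbd map`. The helper names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: stable device path for a mapped RBD image.
# $1 = pool, $2 = image
rbd_dev_path() {
    printf '/dev/rbd/%s/%s\n' "$1" "$2"
}

# Hypothetical helper: map the image if needed, then mount it if needed.
# $1 = pool, $2 = image, $3 = mount point
map_and_mount() {
    dev=$(rbd_dev_path "$1" "$2")
    [ -e "$dev" ] || rbd map "$1/$2" || return 1
    mountpoint -q "$3" || mount "$dev" "$3"
}

# Show the path that would be used for the image from this example.
rbd_dev_path violet docker
```

In /etc/rc.local the call would be `map_and_mount violet docker /violet/data/docker`. The symlink can take a moment to appear after mapping, so a short wait or retry before the mount may be needed.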

[root@elk92 ~]#

- 6.Reboot the server

[root@elk92 ~]# reboot

- 7.Verify that the mount succeeded

[root@elk92 ~]# rbd showmapped

id pool namespace image snap device

0 violet docker - /dev/rbd0

[root@elk92 ~]#

[root@elk92 ~]# df -h | grep data

/dev/rbd0 200G 1.5G 199G 1% /violet/data/docker

[root@elk92 ~]#

- 8.Migrate Docker's existing data

[root@elk92 ~]# df -h | egrep "ubuntu|data"

/dev/mapper/ubuntu--vg-ubuntu--lv 97G 42G 51G 45% /

/dev/rbd0 200G 1.5G 199G 1% /violet/data/docker

[root@elk92 ~]#

[root@elk92 ~]# systemctl stop docker # if containers are running, stop them by hand first (this also unmounts their overlayFS mounts), then stop the service.

[root@elk92 ~]#

[root@elk92 ~]# du -sh /var/lib/docker/

25G /var/lib/docker/

[root@elk92 ~]#

[root@elk92 ~]# mv /var/lib/docker/* /violet/data/docker/ # data migration done

[root@elk92 ~]#

[root@elk92 ~]# df -h | egrep "ubuntu|data"

/dev/mapper/ubuntu--vg-ubuntu--lv 97G 25G 68G 27% /

/dev/rbd0 200G 26G 174G 13% /violet/data/docker
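The `mv /var/lib/docker/*` step relies on shell globbing, which skips hidden entries and removes the source as it goes. A copy-then-verify approach is more forgiving. The sketch below shows the idea on throwaway directories; the helper name `copy_tree` is hypothetical, and `cp -a` is used to preserve ownership, permissions, and symlinks.

```shell
#!/bin/sh
# Hypothetical helper: copy the full contents of a directory, hidden files
# included, preserving attributes.
# $1 = source directory, $2 = destination directory
copy_tree() {
    cp -a "$1/." "$2/"
}

# Demo on temporary directories rather than the real Docker data root.
src=$(mktemp -d)
dst=$(mktemp -d)
touch "$src/visible" "$src/.hidden"
copy_tree "$src" "$dst"
ls -A "$dst"
rm -rf "$src" "$dst"
```

For the real migration the call would be `copy_tree /var/lib/docker /violet/data/docker` with Docker stopped; the old directory can be removed once `docker image ls` shows the expected images from the new data root.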

[root@elk92 ~]#

- 9.Edit Docker's systemd unit file and restart the docker service

[root@elk92 ~]# cat /lib/systemd/system/docker.service

[Unit]

Description=violet linux Docker Engine

Documentation=https://docs.docker.com,https://www.violet.com

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd --data-root /violet/data/docker

# Configure the Docker proxy

Environment="HTTP_PROXY=http://10.0.0.1:7890"

Environment="HTTPS_PROXY=http://10.0.0.1:7890"

[Install]

WantedBy=multi-user.target

[root@elk92 ~]#

[root@elk92 ~]# systemctl daemon-reload

[root@elk92 ~]#

[root@elk92 ~]# systemctl restart docker

[root@elk92 ~]#

- 10.Verify that Docker's images were migrated successfully

[root@elk92 ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.91/violet-dockerfile/demo v0.2 dff87e693fb6 8 days ago 7.83MB

10.0.0.91/violet-dockerfile/demo v0.1 e0112d015dda 8 days ago 7.83MB

<none> <none> 52093c41b43b 9 days ago 287MB

demo v1.9 b0a781f408ba 9 days ago 19.7MB

demo v1.8 055ffcae9441 9 days ago 19.7MB

demo v1.7 fbed9a014d19 9 days ago 19.7MB

demo v1.6 48d79bd36436 9 days ago 19.7MB

<none> <none> 36deeac3b37e 9 days ago 19.7MB

<none> <none> ad0432a79a86 9 days ago 19.7MB

demo v1.5 4452f5c6118a 9 days ago 19.7MB

demo v1.4 36f36d2381f0 9 days ago 19.7MB

demo v1.3 c7bfa24c8c2d 9 days ago 18.5MB

demo v1.2 327082144555 9 days ago 287MB

demo v1.1 cbb8dac94df9 9 days ago 290MB

demo v1.0 cf47180c5142 9 days ago 290MB

mongo 8.0.6-noble b1d4ab2a0342 11 days ago 887MB

demo v0.6 50fba3763222 11 days ago 7.84MB

<none> <none> 4909fbdcfeda 11 days ago 7.84MB

<none> <none> 28555b3834d3 11 days ago 7.84MB

<none> <none> 5cf6720dffc0 11 days ago 7.84MB

<none> <none> 87b11265940c 11 days ago 7.83MB

<none> <none> e7e13e0002fe 11 days ago 7.83MB

<none> <none> c168e0a18e67 11 days ago 7.83MB

demo v0.5 67454ec9001c 11 days ago 7.84MB

demo v0.4 463ab10aca32 11 days ago 7.84MB

demo v0.3 493282b33bb3 12 days ago 7.84MB

demo v0.2 ebbfe1003640 12 days ago 7.84MB

<none> <none> d8ad6aeb0ac5 12 days ago 7.84MB

demo v0.1 b55f7262e3f7 12 days ago 7.84MB

<none> <none> 6013cfa87e61 12 days ago 7.83MB

10.0.0.93/violet-linux/violet-linux v2.15 7868472fa986 12 days ago 34.6MB

violet-linux v2.15 7868472fa986 12 days ago 34.6MB

<none> <none> 4c2a0125a7fa 12 days ago 34.6MB

<none> <none> d4130b03d2cb 12 days ago 34.6MB

<none> <none> d95dfd3fcf12 12 days ago 34.6MB

<none> <none> fdbd46f383b7 12 days ago 29.6MB

<none> <none> 948a47f76aec 12 days ago 29.6MB

violet-linux v2.13 191f43d0bd10 12 days ago 29.6MB

violet-linux v2.14 191f43d0bd10 12 days ago 29.6MB

violet-linux v2.12 e7e669d6b754 12 days ago 29.6MB

violet-linux v2.11 19710c3a6193 12 days ago 29.6MB

violet-linux v2.10 adb6d91d9366 12 days ago 42.7MB

violet-linux v2.9 da6a97b82d06 12 days ago 42.7MB

<none> <none> b00daf6e73c7 12 days ago 28.6MB

violet-linux v2.8 e05d1ec193be 12 days ago 15.6MB

violet-linux v2.7 db662b084782 12 days ago 15.6MB

violet-linux v2.6 17c625423dc1 12 days ago 15.6MB

violet-linux v2.5 df799a2d275d 12 days ago 15.6MB

violet-linux v2.4 f40797cfef10 12 days ago 15.6MB

violet-linux v2.3 8154a438fff1 12 days ago 15.6MB

violet-linux v2.2 b22e3c601e4b 12 days ago 15.6MB

violet-linux v2.1 9412abe4c610 12 days ago 15.6MB

violet-linux v2.0 b39d9de98cec 12 days ago 15.6MB

violet-linux v1.2 6d21180848aa 12 days ago 144MB

violet-linux v1.1 8d2995ba3491 12 days ago 144MB

violet-linux v1.0 4022564d25a1 12 days ago 146MB

violet-linux v0.3 cb2433559d05 12 days ago 248MB

violet-linux v0.2 9516678a3ba1 12 days ago 248MB

violet-linux v0.1 c5271db1632c 12 days ago 284MB

gcr.io/cadvisor/cadvisor-amd64 v0.52.1 de1f4a4d7753 13 days ago 80.7MB

ubuntu/squid latest 606c297474cf 13 days ago 243MB

zabbix/zabbix-web-nginx-mysql alpine-7.2-latest 0b4a2f1a0e3c 2 weeks ago 261MB

zabbix/zabbix-server-mysql alpine-7.2-latest 8f6e0ed5ae14 2 weeks ago 101MB

zabbix/zabbix-java-gateway alpine-7.2-latest 02d4e5dda618 2 weeks ago 194MB

docker.elastic.co/kibana/kibana 8.17.3 1800828c430b 4 weeks ago 1.18GB

docker.elastic.co/elasticsearch/elasticsearch 8.17.3 869b1daa5a9f 4 weeks ago 1.32GB

langgenius/dify-plugin-daemon 0.0.3-local 98e493768cf1 4 weeks ago 909MB

langgenius/dify-api 1.0.0 e75fe639e420 4 weeks ago 2.14GB

langgenius/dify-web 1.0.0 0325f9a1e172 4 weeks ago 472MB

postgres 15-alpine afbf3abf6aeb 4 weeks ago 273MB

10.0.0.93/violet-xixi/alpine 3.21.3 aded1e1a5b37 6 weeks ago 7.83MB

alpine 3.21.3 aded1e1a5b37 6 weeks ago 7.83MB

alpine latest aded1e1a5b37 6 weeks ago 7.83MB

alpine 3.19.7 13e536457b0c 6 weeks ago 7.4MB

alpine 3.18.12 802c91d52981 6 weeks ago 7.36MB

alpine 3.20.6 ff221270b9fb 6 weeks ago 7.8MB

nginx 1.27.4-alpine 1ff4bb4faebc 7 weeks ago 47.9MB

nginx latest 53a18edff809 7 weeks ago 192MB

ubuntu 24.04 a04dc4851cbc 2 months ago 78.1MB

ubuntu 22.04 a24be041d957 2 months ago 77.9MB

10.0.0.91/violet-haha/hello-world latest 74cc54e27dc4 2 months ago 10.1kB

hello-world latest 74cc54e27dc4 2 months ago 10.1kB

10.0.0.91/violet-haha/redis 6-alpine 6dd588768b9b 2 months ago 30.2MB

redis 6-alpine 6dd588768b9b 2 months ago 30.2MB

redis 7.4.2-alpine 8f5c54441eb9 2 months ago 41.4MB

wordpress 6.7.1-php8.1-apache 13ffff361078 4 months ago 700MB

langgenius/dify-sandbox 0.2.10 4328059557e8 5 months ago 567MB

mysql 8.0.36-oracle f5f171121fa3 12 months ago 603MB

violet007/violet-games v0.6 b55cbfca1946 12 months ago 376MB

registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps v1 f28fd43be4ad 14 months ago 23MB

registry 2.8.3 26b2eb03618e 18 months ago 25.4MB

inporl/violet-linux96 busybox 2d61ae04c2b8 22 months ago 4.27MB

busybox 1.36.1 2d61ae04c2b8 22 months ago 4.27MB

semitechnologies/weaviate 1.19.0 8ec9f084ab23 23 months ago 52.5MB

violet007/violet-linux-tools v0.1 da6fdb7c9168 3 years ago 5.65MB

centos 7.9.2009 eeb6ee3f44bd 3 years ago 204MB

centos 8.4.2105 5d0da3dc9764 3 years ago 231MB

centos centos8 5d0da3dc9764 3 years ago 231MB

python 2.7.18-alpine3.11 8579e446340f 4 years ago 71.1MB

[root@elk92 ~]#

Bonus: changing dockerd's startup parameters through the configuration file

- 1.Restore the systemd unit file

[root@elk92 ~]# cat /lib/systemd/system/docker.service

[Unit]

Description=violet linux Docker Engine

Documentation=https://docs.docker.com,https://www.violet.com

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

# Configure the Docker proxy

Environment="HTTP_PROXY=http://10.0.0.1:7890"

Environment="HTTPS_PROXY=http://10.0.0.1:7890"

[Install]

WantedBy=multi-user.target

[root@elk92 ~]#

[root@elk92 ~]# systemctl daemon-reload

[root@elk92 ~]#

[root@elk92 ~]# systemctl restart docker

[root@elk92 ~]#

[root@elk92 ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@elk92 ~]#

[root@elk92 ~]# docker info | grep Root

Docker Root Dir: /var/lib/docker

[root@elk92 ~]#

- 2.Edit Docker's configuration file

[root@elk92 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000","10.0.0.93","10.0.0.91"],

"data-root": "/violet/data/docker"

}

[root@elk92 ~]#

[root@elk92 ~]# systemctl restart docker

[root@elk92 ~]#

[root@elk92 ~]# docker info | grep Root

Docker Root Dir: /violet/data/docker

[root@elk92 ~]#

[root@elk92 ~]#

[root@elk92 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.91/violet-dockerfile/demo v0.1 e0112d015dda 8 days ago 7.83MB

10.0.0.91/violet-dockerfile/demo v0.2 dff87e693fb6 8 days ago 7.83MB

<none> <none> 52093c41b43b 9 days ago 287MB

demo v1.9 b0a781f408ba 9 days ago 19.7MB

demo v1.8 055ffcae9441 9 days ago 19.7MB

demo v1.7 fbed9a014d19 9 days ago 19.7MB

demo v1.6 48d79bd36436 9 days ago 19.7MB

<none> <none> 36deeac3b37e 9 days ago 19.7MB

<none> <none> ad0432a79a86 9 days ago 19.7MB

demo v1.5 4452f5c6118a 9 days ago 19.7MB

demo v1.4 36f36d2381f0 9 days ago 19.7MB

demo v1.3 c7bfa24c8c2d 9 days ago 18.5MB

demo v1.2 327082144555 9 days ago 287MB

demo v1.1 cbb8dac94df9 9 days ago 290MB

demo v1.0 cf47180c5142 9 days ago 290MB

mongo 8.0.6-noble b1d4ab2a0342 11 days ago 887MB

demo v0.6 50fba3763222 11 days ago 7.84MB

<none> <none> 4909fbdcfeda 11 days ago 7.84MB

<none> <none> 28555b3834d3 11 days ago 7.84MB

<none> <none> 5cf6720dffc0 11 days ago 7.84MB

<none> <none> 87b11265940c 11 days ago 7.83MB

<none> <none> e7e13e0002fe 11 days ago 7.83MB

<none> <none> c168e0a18e67 11 days ago 7.83MB

demo v0.5 67454ec9001c 11 days ago 7.84MB

demo v0.4 463ab10aca32 11 days ago 7.84MB

demo v0.3 493282b33bb3 12 days ago 7.84MB

demo v0.2 ebbfe1003640 12 days ago 7.84MB

<none> <none> d8ad6aeb0ac5 12 days ago 7.84MB

demo v0.1 b55f7262e3f7 12 days ago 7.84MB

<none> <none> 6013cfa87e61 12 days ago 7.83MB

10.0.0.93/violet-linux/violet-linux96 v2.15 7868472fa986 12 days ago 34.6MB

violet-linux96 v2.15 7868472fa986 12 days ago 34.6MB

<none> <none> 4c2a0125a7fa 12 days ago 34.6MB

<none> <none> d4130b03d2cb 12 days ago 34.6MB

<none> <none> d95dfd3fcf12 12 days ago 34.6MB

<none> <none> fdbd46f383b7 12 days ago 29.6MB

<none> <none> 948a47f76aec 12 days ago 29.6MB

violet-linux96 v2.13 191f43d0bd10 12 days ago 29.6MB

violet-linux96 v2.14 191f43d0bd10 12 days ago 29.6MB

violet-linux96 v2.12 e7e669d6b754 12 days ago 29.6MB

violet-linux96 v2.11 19710c3a6193 12 days ago 29.6MB

violet-linux96 v2.10 adb6d91d9366 12 days ago 42.7MB

violet-linux96 v2.9 da6a97b82d06 12 days ago 42.7MB

<none> <none> b00daf6e73c7 12 days ago 28.6MB

violet-linux96 v2.8 e05d1ec193be 12 days ago 15.6MB

violet-linux96 v2.7 db662b084782 12 days ago 15.6MB

violet-linux96 v2.6 17c625423dc1 12 days ago 15.6MB

violet-linux96 v2.5 df799a2d275d 12 days ago 15.6MB

violet-linux96 v2.4 f40797cfef10 12 days ago 15.6MB

violet-linux96 v2.3 8154a438fff1 12 days ago 15.6MB

violet-linux96 v2.2 b22e3c601e4b 12 days ago 15.6MB

violet-linux96 v2.1 9412abe4c610 12 days ago 15.6MB

violet-linux96 v2.0 b39d9de98cec 12 days ago 15.6MB

violet-linux96 v1.2 6d21180848aa 12 days ago 144MB

violet-linux96 v1.1 8d2995ba3491 12 days ago 144MB

violet-linux96 v1.0 4022564d25a1 12 days ago 146MB

violet-linux96 v0.3 cb2433559d05 12 days ago 248MB

violet-linux96 v0.2 9516678a3ba1 12 days ago 248MB

violet-linux96 v0.1 c5271db1632c 12 days ago 284MB

gcr.io/cadvisor/cadvisor-amd64 v0.52.1 de1f4a4d7753 13 days ago 80.7MB

ubuntu/squid latest 606c297474cf 13 days ago 243MB

zabbix/zabbix-web-nginx-mysql alpine-7.2-latest 0b4a2f1a0e3c 2 weeks ago 261MB

zabbix/zabbix-server-mysql alpine-7.2-latest 8f6e0ed5ae14 2 weeks ago 101MB

zabbix/zabbix-java-gateway alpine-7.2-latest 02d4e5dda618 2 weeks ago 194MB

docker.elastic.co/kibana/kibana 8.17.3 1800828c430b 4 weeks ago 1.18GB

docker.elastic.co/elasticsearch/elasticsearch 8.17.3 869b1daa5a9f 4 weeks ago 1.32GB

langgenius/dify-plugin-daemon 0.0.3-local 98e493768cf1 4 weeks ago 909MB

langgenius/dify-api 1.0.0 e75fe639e420 4 weeks ago 2.14GB

langgenius/dify-web 1.0.0 0325f9a1e172 4 weeks ago 472MB

postgres 15-alpine afbf3abf6aeb 4 weeks ago 273MB

10.0.0.93/violet-xixi/alpine 3.21.3 aded1e1a5b37 6 weeks ago 7.83MB

alpine 3.21.3 aded1e1a5b37 6 weeks ago 7.83MB

alpine latest aded1e1a5b37 6 weeks ago 7.83MB

alpine 3.19.7 13e536457b0c 6 weeks ago 7.4MB

alpine 3.18.12 802c91d52981 6 weeks ago 7.36MB

alpine 3.20.6 ff221270b9fb 6 weeks ago 7.8MB

nginx latest 53a18edff809 7 weeks ago 192MB

nginx 1.27.4-alpine 1ff4bb4faebc 7 weeks ago 47.9MB

ubuntu 24.04 a04dc4851cbc 2 months ago 78.1MB

ubuntu 22.04 a24be041d957 2 months ago 77.9MB

10.0.0.91/violet-haha/hello-world latest 74cc54e27dc4 2 months ago 10.1kB

hello-world latest 74cc54e27dc4 2 months ago 10.1kB

10.0.0.91/violet-haha/redis 6-alpine 6dd588768b9b 2 months ago 30.2MB

redis 6-alpine 6dd588768b9b 2 months ago 30.2MB

redis 7.4.2-alpine 8f5c54441eb9 2 months ago 41.4MB

wordpress 6.7.1-php8.1-apache 13ffff361078 4 months ago 700MB

langgenius/dify-sandbox 0.2.10 4328059557e8 5 months ago 567MB

mysql 8.0.36-oracle f5f171121fa3 12 months ago 603MB

violet007/violet-games v0.6 b55cbfca1946 12 months ago 376MB

registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps v1 f28fd43be4ad 14 months ago 23MB

registry 2.8.3 26b2eb03618e 18 months ago 25.4MB

inporl/violet-linux96 busybox 2d61ae04c2b8 22 months ago 4.27MB

busybox 1.36.1 2d61ae04c2b8 22 months ago 4.27MB

semitechnologies/weaviate 1.19.0 8ec9f084ab23 23 months ago 52.5MB

violet007/violet-linux-tools v0.1 da6fdb7c9168 3 years ago 5.65MB

centos 7.9.2009 eeb6ee3f44bd 3 years ago 204MB

centos 8.4.2105 5d0da3dc9764 3 years ago 231MB

centos centos8 5d0da3dc9764 3 years ago 231MB

python 2.7.18-alpine3.11 8579e446340f 4 years ago 71.1MB

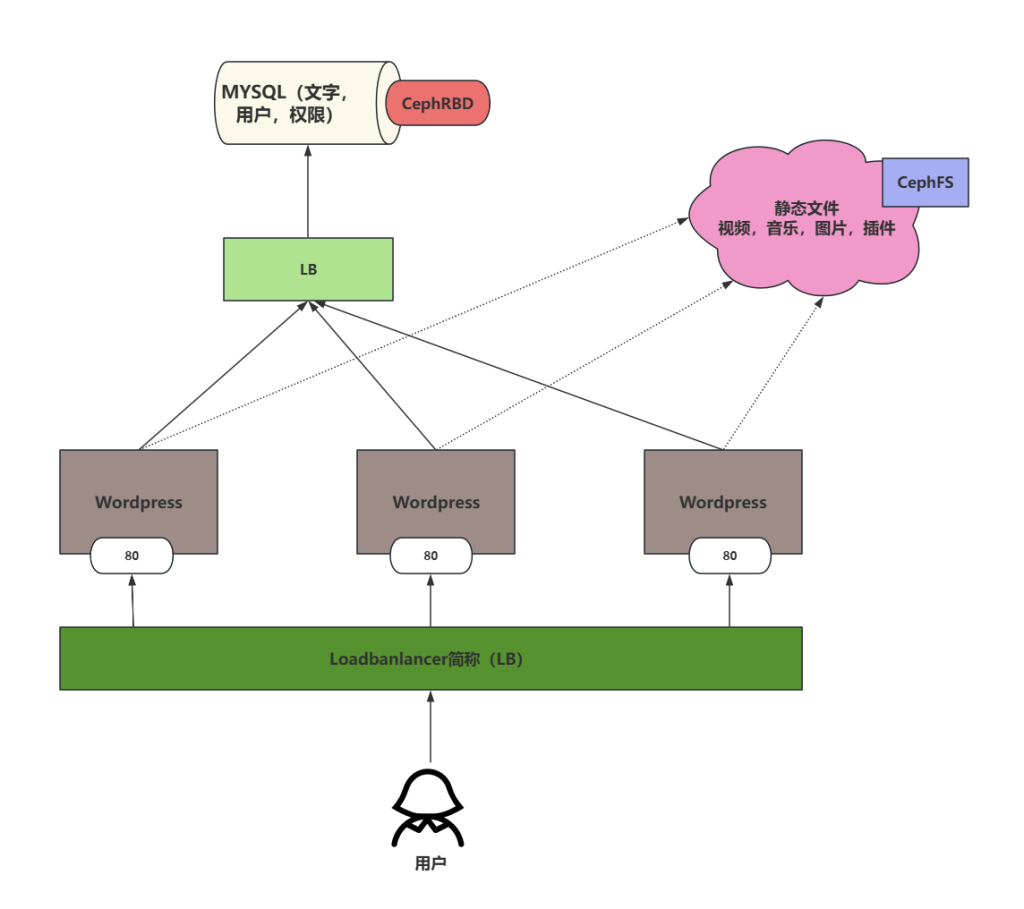

[root@elk92 ~]#

WordPress with Ceph: project architecture

- 1. Architecture overview for WordPress with Ceph

- 2. Start MySQL with Docker

2.1 Create a block device on the Ceph cluster

[root@ceph141 ~]# rbd create -s 100G violet/wordpress-db

[root@ceph141 ~]#

[root@ceph141 ~]# rbd info violet/wordpress-db

rbd image 'wordpress-db':

size 100 GiB in 25600 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: e00a2fd83cf5

block_name_prefix: rbd_data.e00a2fd83cf5

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Wed Apr 2 17:06:28 2025

access_timestamp: Wed Apr 2 17:06:28 2025

modify_timestamp: Wed Apr 2 17:06:28 2025

[root@ceph141 ~]#

2.2 Map and mount the block device on the client

[root@elk92 ~]# rbd map violet/wordpress-db

/dev/rbd1

[root@elk92 ~]#

[root@elk92 ~]# rbd showmapped

id pool namespace image snap device

0 oldboyedu docker - /dev/rbd0

1 violet wordpress-db - /dev/rbd1

[root@elk92 ~]#

[root@elk92 ~]# fdisk -l /dev/rbd1

Disk /dev/rbd1: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

[root@elk92 ~]#

[root@elk92 ~]# mkfs.xfs /dev/rbd1

meta-data=/dev/rbd1 isize=512 agcount=16, agsize=1638400 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1 bigtime=0 inobtcount=0

data = bsize=4096 blocks=26214400, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=12800, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

[root@elk92 ~]#

[root@elk92 ~]# mkdir -pv /wordpress/db

mkdir: created directory '/wordpress'

mkdir: created directory '/wordpress/db'

[root@elk92 ~]#

[root@elk92 ~]# mount /dev/rbd1 /wordpress/db

[root@elk92 ~]#

[root@elk92 ~]# df -h | grep wordpress

/dev/rbd1 100G 747M 100G 1% /wordpress/db

[root@elk92 ~]#
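The "rbd map" and "mount" commands above do not persist across a reboot. One common approach is the rbdmap service shipped with ceph-common, which reads /etc/ceph/rbdmap and then mounts matching /etc/fstab entries. The sketch below only prints the two lines that would be needed. The helper names are hypothetical, and it assumes the admin keyring lives at /etc/ceph/ceph.client.admin.keyring and that udev creates the /dev/rbd/POOL/IMAGE symlink.

```shell
# Hypothetical helpers; they only print configuration lines.

# Line for /etc/ceph/rbdmap. Usage: rbdmap_entry POOL IMAGE USER
rbdmap_entry() {
    printf '%s/%s id=%s,keyring=/etc/ceph/ceph.client.%s.keyring\n' \
        "$1" "$2" "$3" "$3"
}

# Line for /etc/fstab. Usage: rbd_fstab_entry POOL IMAGE MOUNT_POINT
# "noauto" leaves the mount to rbdmap, which runs after the image is mapped.
rbd_fstab_entry() {
    printf '/dev/rbd/%s/%s %s xfs noauto 0 0\n' "$1" "$2" "$3"
}

rbdmap_entry violet wordpress-db admin
rbd_fstab_entry violet wordpress-db /wordpress/db
```

After reviewing the output, the first line would go into /etc/ceph/rbdmap and the second into /etc/fstab, followed by "systemctl enable rbdmap.service".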

2.3 Start the MySQL database

[root@elk92 ~]# docker run -d --name wordpress-db-server \

-e MYSQL_DATABASE=wordpress \

-e MYSQL_ALLOW_EMPTY_PASSWORD=yes \

-e MYSQL_USER=admin \

-e MYSQL_PASSWORD=123456 \

-v /wordpress/db:/var/lib/mysql \

--network host \

--restart always \

mysql:8.0.36-oracle

[root@elk92 ~]# docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4f3f8b78e4ec mysql:8.0.36-oracle "docker-entrypoint.s…" 8 seconds ago Up 8 seconds wordpress-db-server

[root@elk92 ~]#

[root@elk92 ~]# ss -ntl | grep 3306

LISTEN 0 151 *:3306 *:*

LISTEN 0 70 *:33060 *:*
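Because the container bind-mounts /wordpress/db and uses "--restart always", it could start before the RBD device is mounted and then write its data to the local disk instead. A minimal guard, assuming the util-linux "mountpoint" command is available (require_mount is a hypothetical name):

```shell
# Hypothetical guard: succeed only if DIR is currently a mount point.
require_mount() {
    if mountpoint -q "$1" 2>/dev/null; then
        return 0
    fi
    echo "refusing to continue: $1 is not a mount point" >&2
    return 1
}

# Only start the container when the RBD-backed directory is mounted.
# The echo keeps this sketch free of side effects; drop it to run the command.
if require_mount /wordpress/db; then
    echo docker start wordpress-db-server
fi
```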

[root@elk92 ~]#

- 3. Start WordPress with Docker

3.1 Install the Ceph client packages on the WordPress nodes

[root@elk91 ~]# apt -y install ceph-common

[root@elk92 ~]# apt -y install ceph-common

[root@elk93 ~]# apt -y install ceph-common

3.2 Copy the authentication files from the server to the WordPress nodes

[root@ceph141 ~]# scp /etc/ceph/ceph.{client.admin.keyring,conf} 10.0.0.91:/etc/ceph

[root@ceph141 ~]# scp /etc/ceph/ceph.{client.admin.keyring,conf} 10.0.0.92:/etc/ceph

[root@ceph141 ~]# scp /etc/ceph/ceph.{client.admin.keyring,conf} 10.0.0.93:/etc/ceph

3.3 Mount the CephFS file system on the clients [make sure the /linux96 directory exists in CephFS, and replace the secret value with your own key]

mkdir -p /wordpress/wp

mount -t ceph 10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789:/linux96 /wordpress/wp -o name=admin,secret=AQAkRepnl8QHDhAAajK/aMH1KaCoVJWt5H2NOQ==

df | grep wordpress
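The mount command above passes the key on the command line and does not survive a reboot. A sketch of an /etc/fstab entry that uses the "secretfile" mount option instead is shown below. The helper name and the /etc/ceph/admin.secret path are assumptions for illustration, and the helper only prints the line.

```shell
# Hypothetical helper: print an /etc/fstab line for a kernel CephFS mount.
# Usage: cephfs_fstab_entry MONITORS CEPHFS_PATH MOUNT_POINT USER SECRET_FILE
cephfs_fstab_entry() {
    printf '%s:%s %s ceph name=%s,secretfile=%s,noatime,_netdev 0 0\n' \
        "$1" "$2" "$3" "$4" "$5"
}

# /etc/ceph/admin.secret is an assumed path. The file holds only the key,
# for example the output of "ceph auth get-key client.admin".
cephfs_fstab_entry \
    10.0.0.141:6789,10.0.0.142:6789,10.0.0.143:6789 \
    /linux96 /wordpress/wp admin /etc/ceph/admin.secret
```

The "_netdev" option marks the entry as a network file system so that it is mounted only after the network is up.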

3.4 Start WordPress

docker run -d --name wordpress-wp-server \

-e WORDPRESS_DB_HOST=10.0.0.92:3306 \

-e WORDPRESS_DB_USER=admin \

-e WORDPRESS_DB_PASSWORD=123456 \

-e WORDPRESS_DB_NAME=wordpress \

-v /wordpress/wp:/var/www/html \

-p 90:80 \

--restart always \

wordpress:6.7.1-php8.1-apache

ss -ntl | grep 90

3.5 Access test

http://10.0.0.92:90/

3.6 Start WordPress on the other nodes
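The notes give no commands for this step. One plausible reading is that each remaining node (assumed here to be 10.0.0.91 and 10.0.0.93) first mounts the same CephFS path on /wordpress/wp as in 3.3 and then runs the same container, still pointing at the database on 10.0.0.92. The sketch below uses a hypothetical helper that only prints the command to run on each node.

```shell
# Hypothetical helper: print the docker run command for one WordPress node.
# Usage: wordpress_run_cmd DB_HOST:PORT
wordpress_run_cmd() {
    printf 'docker run -d --name wordpress-wp-server'
    printf ' -e WORDPRESS_DB_HOST=%s' "$1"
    printf ' -e WORDPRESS_DB_USER=admin -e WORDPRESS_DB_PASSWORD=123456'
    printf ' -e WORDPRESS_DB_NAME=wordpress'
    printf ' -v /wordpress/wp:/var/www/html -p 90:80 --restart always'
    printf ' wordpress:6.7.1-php8.1-apache\n'
}

# Assumed node list; the database stays on 10.0.0.92 for every node.
for node in 10.0.0.91 10.0.0.93; do
    echo "# run on $node after mounting /wordpress/wp:"
    wordpress_run_cmd 10.0.0.92:3306
done
```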

Errors you may encounter

Q1:

[root@ceph141 ~]# rbd snap limit set laonanhai/ubuntu-2404-lts --limit 3

rbd: setting snapshot limit failed: (34) Numerical result out of range

2025-04-02T10:03:59.709+0800 7fbdadbb2640 -1 librbd::SnapshotLimitRequest: encountered error: (34) Numerical result out of range

[root@ceph141 ~]#

Solution:

The image already has more snapshots than the limit being set. Choose a higher snapshot limit.

Q2:

[root@ceph141 ~]# rbd snap create laonanhai/ubuntu-2404-lts@haha

Creating snap: 10% complete...failed.

rbd: failed to create snapshot: (122) Disk quota exceeded

[root@ceph141 ~]#

Solution:

The number of snapshots has reached the configured limit. Contact the administrator to raise the snapshot limit.
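Both Q1 and Q2 come down to comparing the snapshot count with the limit. The existing snapshots can be listed with "rbd snap ls POOL/IMAGE", the limit is set with "rbd snap limit set POOL/IMAGE --limit N", and it can be removed with "rbd snap limit clear POOL/IMAGE". The sketch below shows the comparison as a hypothetical helper. It assumes a limit equal to the current count is accepted, and the numbers are made up for illustration.

```shell
# Hypothetical check: a new limit is acceptable only if it is not below the
# number of snapshots the image already has.
# Usage: snap_limit_ok CURRENT_COUNT NEW_LIMIT
snap_limit_ok() {
    current=$1
    new_limit=$2
    [ "$new_limit" -ge "$current" ]
}

# Illustrative numbers: with 5 existing snapshots, a limit of 3 is rejected
# and a limit of 10 passes.
for limit in 3 10; do
    if snap_limit_ok 5 "$limit"; then
        echo "limit $limit: ok"
    else
        echo "limit $limit: too low, raise it or remove snapshots first"
    fi
done
```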

Q3:

[root@prometheus-server31 ~]# df -h | grep rbd3

/dev/rbd3 976K 40K 868K 5% /violet/ubuntu

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# cp /etc/passwd /violet/ubuntu/

cp: cannot create regular file '/violet/ubuntu/passwd': No space left on device

[root@prometheus-server31 ~]#

Solution:

Either the storage space is exhausted, or the corresponding block device or storage pool has been deleted on the server side.

Q4:

[root@prometheus-server31 ~]# mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data -o name=linux96,secret=AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

did not load config file, using default settings.

2025-04-02T14:46:45.472+0800 7fcc8caf6f40 -1 Errors while parsing config file!

2025-04-02T14:46:45.472+0800 7fcc8caf6f40 -1 can't open ceph.conf: (2) No such file or directory

2025-04-02T14:46:45.472+0800 7fcc8caf6f40 -1 Errors while parsing config file!

2025-04-02T14:46:45.472+0800 7fcc8caf6f40 -1 can't open ceph.conf: (2) No such file or directory

unable to get monitor info from DNS SRV with service name: ceph-mon

2025-04-02T14:46:45.496+0800 7fcc8caf6f40 -1 failed for service _ceph-mon._tcp

[root@prometheus-server31 ~]#

Solution:

The Ceph configuration file is missing. Copy "ceph.conf" to the current node.

Q5:

[root@prometheus-server31 ~]# mount -t ceph ceph141:6789,ceph142:6789,ceph143:6789:/ /data -o name=linux96,secret=AQCz2+xnI9UVHxAAlU1w/7Yp5MGhya4CwyP0tA==

2025-04-02T14:48:35.136+0800 7fcaf21bbf40 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.linux96.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin: (2) No such file or directory

[root@prometheus-server31 ~]#

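Solution:

The keyring file for the "linux96" user is missing. You can copy it over, but it is not required: the key is already passed with the "secret" option, so this message is only a warning.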
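Q4 and Q5 can both be spotted before mounting by checking which client files exist. The sketch below is a hypothetical preflight helper. It checks only ceph.conf and the per-user keyring name, not the other keyring paths Ceph also searches.

```shell
# Hypothetical preflight: report which client files are missing in CONF_DIR.
# Usage: ceph_client_preflight CONF_DIR USER
ceph_client_preflight() {
    conf_dir=$1
    user=$2
    status=0
    if [ ! -r "$conf_dir/ceph.conf" ]; then
        echo "missing: $conf_dir/ceph.conf"
        status=1
    fi
    # Only the first keyring path from the warning in Q5 is checked here.
    if [ ! -r "$conf_dir/ceph.client.$user.keyring" ]; then
        echo "missing: $conf_dir/ceph.client.$user.keyring"
        status=1
    fi
    return "$status"
}

ceph_client_preflight /etc/ceph linux96 ||
    echo "copy the files listed above from a cluster node"
```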